“We cannot think of new musical instruments anymore without machine learning.” (Guest Lecturer Laetitia Sonami)

Wekinator

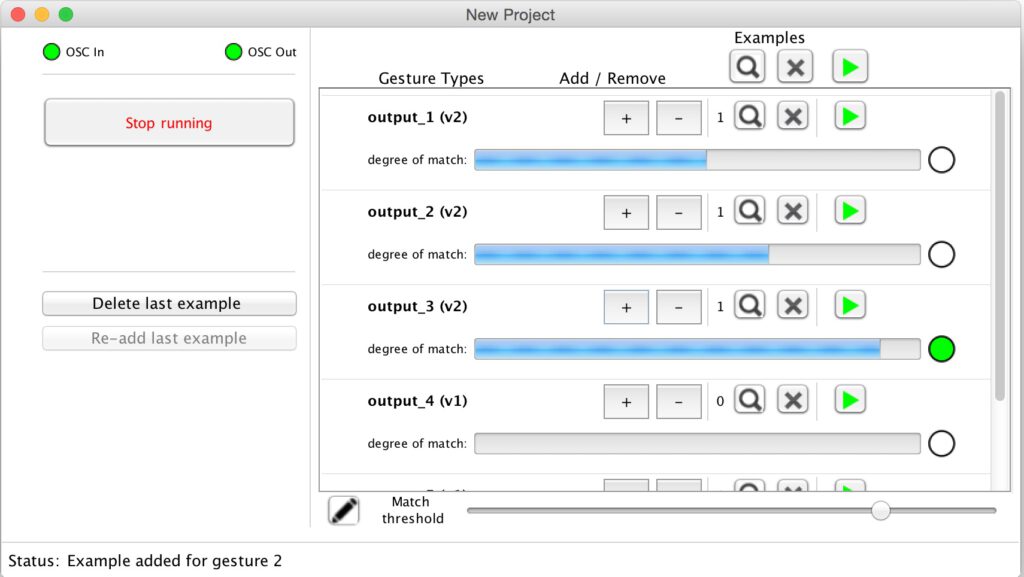

“Machine Learning for Musicians and Artists” is a course hosted on Kadenze.com and taught by Dr. Rebecca Fiebrink. Fiebrink created a tool called Wekinator in 2009 as part of her Ph.D. thesis. Wekinator wraps the Weka Machine Learning toolkit for Java in an artist-friendly GUI application. It connects through OSC as middleware between any kind of input and output, often Max/MSP or Processing patches, and it is the central tool of this course.
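To make the middleware idea concrete, here is a minimal sketch of how a program could hand input values to Wekinator over OSC, using only the Python standard library. It assumes Wekinator's usual defaults as I understand them (listening on UDP port 6448 for the address `/wek/inputs`), so check your own project settings. The helper names `osc_pad`, `osc_message` and `send_inputs` are mine, and the example values are made up.

```python
import socket
import struct
from typing import Sequence


def osc_pad(data: bytes) -> bytes:
    """Null-terminate a string and pad it to a multiple of 4 bytes (OSC 1.0)."""
    padded_len = (len(data) // 4 + 1) * 4
    return data.ljust(padded_len, b"\x00")


def osc_message(address: str, values: Sequence[float]) -> bytes:
    """Encode an OSC message that carries only 32-bit float arguments."""
    type_tags = "," + "f" * len(values)
    payload = b"".join(struct.pack(">f", v) for v in values)  # big-endian floats
    return osc_pad(address.encode("ascii")) + osc_pad(type_tags.encode("ascii")) + payload


def send_inputs(values, host="127.0.0.1", port=6448, address="/wek/inputs"):
    """Send one frame of input values via UDP (port and address are assumed defaults)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message(address, values), (host, port))


if __name__ == "__main__":
    # Two made-up input values, e.g. a mouse position scaled to 0..1.
    send_inputs([0.5, 1.0])
```

In practice a Max/MSP or Processing patch would do this sending for you; the point is only that the "input" side is nothing more than a stream of float lists.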

If you became curious about Machine Learning because you have seen the amazing GAN image generators or the vast progress in Natural Language Processing in recent years, you’ll be surprised not to find anything like that in this course. Weka, and with it Wekinator, is essentially technology from the 90’s: it can do classification (k-Nearest Neighbour and its siblings), regression (linear and polynomial), dynamic time warping and so on, but none of the recent deep learning models. However, I found it a great way to learn the foundations of Machine Learning applied to the familiar environments of theatre and music.
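To give an idea of how simple these classic methods are, here is a small sketch of a k-Nearest Neighbour classifier in plain Python. It is not Weka's or Wekinator's implementation, just the textbook idea: look up the k recorded examples closest to the new input and let them vote. The function name `knn_classify`, the two-dimensional features and the labels "open" and "fist" are invented for illustration.

```python
import math
from collections import Counter


def knn_classify(examples, query, k=3):
    """Return the majority label among the k examples nearest to `query`.

    `examples` is a list of (feature_vector, label) pairs.
    """
    nearest = sorted(examples, key=lambda ex: math.dist(ex[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Made-up training data: two clusters of 2-D feature vectors.
examples = [
    ((0.10, 0.20), "open"),
    ((0.20, 0.10), "open"),
    ((0.15, 0.15), "open"),
    ((0.90, 0.80), "fist"),
    ((0.80, 0.90), "fist"),
    ((0.85, 0.85), "fist"),
]

print(knn_classify(examples, (0.2, 0.2)))
print(knn_classify(examples, (0.8, 0.8)))
```

There is no real "training" step here: the model is the list of examples itself, which is why adding or deleting examples feels so immediate.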

Feature Extraction

This course made me understand not only basic terminology like regression and cost function (which proved very useful for communicating with specialists from the field) but, most of all, taught me to think closely about feature extraction. The now classic Machine Learning methods from the 80’s and 90’s (and with them Wekinator) need a human to extract meaningful features from the raw data, turning the original complex problem into a trivial classification or regression problem. Although Deep Learning promises to automate the extraction of features, the course taught me how to tackle a Machine Learning task in very fundamental ways. The difference between Machine Learning and Deep Learning in terms of feature extraction and necessary human input is described very well in a lecture by Lex Fridman, in which he teaches that Deep Learning is in fact Representation Learning.

Rapid Interaction Prototyping

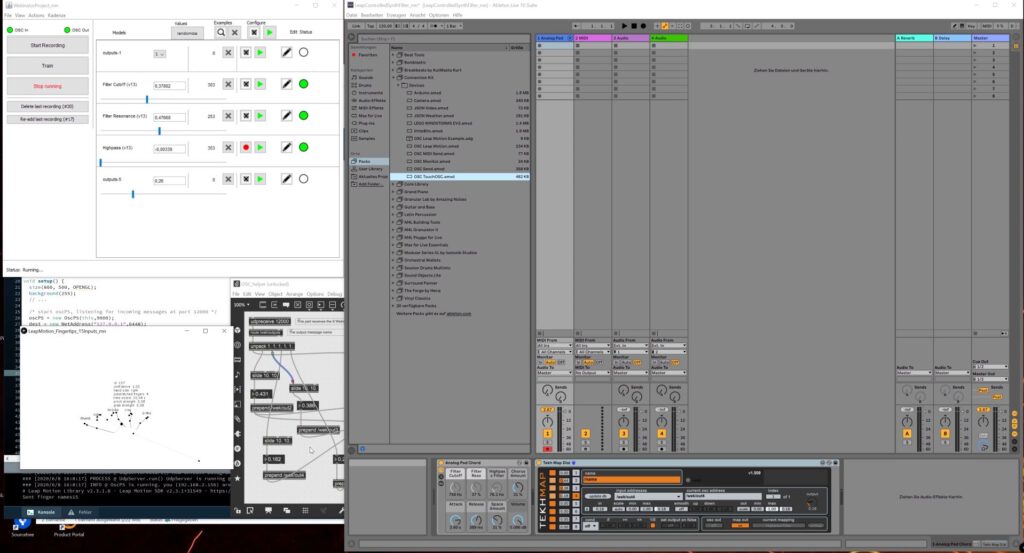

As mentioned, the course doesn’t address computer-generated art or the like, but it addresses a very fundamental problem that everybody who is into live electronics or interactive systems runs into: how to speed up the process of mapping any kind of input data to the desired output result? Say you’re working with a Leap Motion hand tracking controller. This device outputs a ton of data: for example, the x, y, z coordinates of every single joint of every single finger, to begin with. Mapping each data vector to a parameter by hand won’t get you anywhere quickly.
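This is also where feature extraction pays off. As a rough sketch, the pile of raw coordinates can be reduced to a couple of numbers that describe what the hand is actually doing. The data layout below (one palm position plus five fingertip positions, thumb first) is a simplification I made up, not the Leap Motion API, and the two features, "openness" and "pinch", are only examples of what one might choose.

```python
import math


def extract_features(palm, fingertips):
    """Reduce raw 3-D hand coordinates to two hand-crafted features.

    palm:       (x, y, z) of the palm centre
    fingertips: five (x, y, z) tuples, thumb first (hypothetical layout)
    """
    # Openness: average distance of the fingertips from the palm.
    spread = [math.dist(palm, tip) for tip in fingertips]
    openness = sum(spread) / len(spread)
    # Pinch: distance between thumb tip and index fingertip.
    pinch = math.dist(fingertips[0], fingertips[1])
    return [openness, pinch]


# Made-up frame of tracking data (arbitrary units).
palm = (0.0, 0.0, 0.0)
fingertips = [(3.0, 4.0, 0.0), (0.0, 0.0, 5.0), (5.0, 0.0, 0.0),
              (0.0, 5.0, 0.0), (0.0, 3.0, 4.0)]

print(extract_features(palm, fingertips))
```

Because both features are distances between points on the hand, they stay the same when the whole hand moves through space, so the model only has to learn about hand shape, not hand position.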

With Machine Learning it goes like this: you hold your hand in a position, select an output value in Wekinator which you want to relate to this hand position, and hit the “record” button in Wekinator, which records data points from the controller. You repeat that procedure with other hand positions and other corresponding output values, then train the model and run it. Often that is already it. Move your hand and hear the values changing the way you imagined. It takes just a couple of moments. A really amazing tool for rapid prototyping.
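The record, train, run cycle can be sketched in a few lines of Python. This is only an illustration of the workflow, not of what Wekinator does internally: instead of one of Wekinator's models it uses a very simple inverse-distance weighted interpolation between the recorded examples. The class name `PoseMapper` and all values are invented.

```python
import math


class PoseMapper:
    """Toy stand-in for the record / train / run cycle (not Wekinator's model)."""

    def __init__(self):
        self.examples = []  # everything recorded so far
        self.trained = []   # snapshot the running model actually uses

    def record(self, features, output):
        """Store one example: these input features should give this output."""
        self.examples.append((tuple(features), float(output)))

    def train(self):
        """For this toy model, training just freezes the recorded examples."""
        self.trained = list(self.examples)

    def run(self, features):
        """Blend recorded outputs, weighting near examples more strongly."""
        if not self.trained:
            raise ValueError("train() must be called with recorded examples first")
        weighted_sum = 0.0
        weight_total = 0.0
        for recorded, output in self.trained:
            distance = math.dist(recorded, features)
            if distance == 0.0:
                return output  # exact match with a recorded pose
            weight = 1.0 / distance ** 2
            weighted_sum += weight * output
            weight_total += weight
        return weighted_sum / weight_total


mapper = PoseMapper()
mapper.record([0.0], 0.0)  # e.g. closed hand -> filter fully closed
mapper.record([1.0], 1.0)  # e.g. open hand   -> filter fully open
mapper.train()
print(mapper.run([0.5]))   # a pose halfway between the two examples
```

The appeal of the workflow is that a bad mapping is fixed by recording a few more examples and retraining, not by rewriting mapping code.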

Inspiration and Understanding

In this regard, the course proved to be really inspiring. For a final project, of course, one needs plenty more detailed development and optimization, but that’s to be expected everywhere. The course is a good intro if you’re new to the area and interested in seeing how simpler ML algorithms can be related to music and performance art.

Featured image stolen from POSTHUMAN FEMINIST AI, obviously showing detections from FaceOSC, which you could also use as an input to Wekinator.